Data centers are among the most HVAC-intensive building types in the world. Unlike standard commercial offices, where comfort cooling is the primary goal, HVAC systems in data centers are designed to protect information technology (IT) hardware from overheating and failure. The stakes are extremely high: according to the Ponemon Institute’s 2023 report on data center outages, the average cost of downtime is $9,000 per minute, and the total cost of a major outage can run to several hundred thousand dollars or more. HVAC failures are a leading contributor to these incidents.

Server racks generate enormous heat loads relative to floor area. Standard commercial buildings may have cooling densities of 50–100 W/m², while data centers regularly exceed 1,000 W/m²; at the rack level, high-performance computing deployments can reach 50 kW per rack or more. This concentrated heat means HVAC systems must deliver precise, reliable, and continuous cooling, often 24/7/365, with little to no tolerance for downtime.
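The paragraph above quotes building densities in W/m² but HPC densities in kW per rack. A minimal sketch for putting both on the same scale follows; the floor area allotted to each rack (2.5 m², covering the rack plus its share of hot and cold aisles) is an assumption chosen for illustration, not a standard value, and real layouts vary.

```python
# Hypothetical sketch: convert a per-rack IT load into an equivalent floor-area density.
# AREA_PER_RACK_M2 is an assumed value, not a standard.
AREA_PER_RACK_M2 = 2.5  # rack footprint plus its share of hot/cold aisles (assumed)


def floor_density_w_per_m2(rack_kw: float, area_per_rack_m2: float = AREA_PER_RACK_M2) -> float:
    """Return the heat density in W/m² for a rack load given in kW."""
    if area_per_rack_m2 <= 0:
        raise ValueError("area_per_rack_m2 must be positive")
    return rack_kw * 1000.0 / area_per_rack_m2


for rack_kw in (5, 10, 50):
    print(f"{rack_kw:>3} kW/rack -> {floor_density_w_per_m2(rack_kw):,.0f} W/m²")
```

Under this assumption a modest 5 kW rack already corresponds to about 2,000 W/m², and a 50 kW HPC rack to about 20,000 W/m², hundreds of times the density of a typical office.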

Beyond reliability, energy efficiency is equally critical. The Uptime Institute estimates that cooling systems account for 30–40% of total energy use in typical data centers. With operators under pressure to reduce Power Usage Effectiveness (PUE), HVAC system optimization is a central strategy in both cost reduction and sustainability efforts. The difference between a PUE of 1.6 (industry average) and 1.2 (best-in-class) can translate into millions in annual energy savings for hyperscale facilities.
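The "millions in annual savings" claim can be checked with simple arithmetic, since total facility energy equals IT energy multiplied by PUE. The sketch below assumes a hypothetical campus with a constant 20 MW average IT load, PUE held constant all year, and an electricity price of $0.08/kWh; none of these figures come from the sources cited above.

```python
HOURS_PER_YEAR = 8760


def annual_facility_energy_mwh(it_load_mw: float, pue: float) -> float:
    """Total facility energy for a constant IT load: IT energy multiplied by PUE."""
    return it_load_mw * pue * HOURS_PER_YEAR


def annual_savings_usd(it_load_mw: float, pue_before: float, pue_after: float,
                       price_usd_per_kwh: float) -> float:
    """Yearly cost difference between two PUE levels at the same IT load."""
    delta_mwh = (annual_facility_energy_mwh(it_load_mw, pue_before)
                 - annual_facility_energy_mwh(it_load_mw, pue_after))
    return delta_mwh * 1000.0 * price_usd_per_kwh  # MWh -> kWh, then price


# Hypothetical hyperscale campus: 20 MW average IT load, $0.08/kWh.
savings = annual_savings_usd(20.0, 1.6, 1.2, 0.08)
print(f"Annual savings: ${savings:,.0f}")
```

With these assumed inputs, moving from PUE 1.6 to 1.2 avoids about 70,000 MWh a year, roughly $5.6 million at the assumed tariff.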

In short, HVAC in data centers is not just about comfort or compliance — it is about mission continuity, financial resilience, and regulatory performance.

Cooling Load Calculations in Data Centers

Unlike typical commercial projects where loads are based on occupancy, lighting, and envelope performance, cooling loads in data centers are almost entirely driven by IT equipment. Each server rack, switch, and storage array translates directly into thermal load. Because of this, accurate IT load forecasting is the foundation of HVAC design.
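Because nearly all electrical power drawn by IT equipment ends up as heat, a first-pass cooling load is simply the summed IT load, and the airflow needed to carry it away follows from the sensible heat equation Q = ρ · V̇ · cp · ΔT. The sketch below assumes sea-level air properties and a 12 K temperature rise across the servers; the rack loads are hypothetical.

```python
AIR_DENSITY = 1.2     # kg/m³, approx. at sea level and ~20 °C (assumed)
AIR_CP = 1005.0       # J/(kg·K), specific heat of dry air
M3S_TO_CFM = 2118.88  # 1 m³/s expressed in cubic feet per minute


def required_airflow_m3s(heat_w: float, delta_t_k: float) -> float:
    """Airflow needed to remove a sensible heat load at a given server ΔT."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    return heat_w / (AIR_DENSITY * AIR_CP * delta_t_k)


# Hypothetical row of racks, nominal IT load per rack in watts.
racks_w = [8_000, 10_000, 12_000, 6_000]
total_w = sum(racks_w)  # treat all IT power as heat to be removed
flow = required_airflow_m3s(total_w, delta_t_k=12.0)
print(f"IT load {total_w / 1000:.0f} kW -> {flow:.2f} m³/s ({flow * M3S_TO_CFM:,.0f} CFM)")
```

Halving the assumed ΔT doubles the required airflow, which is one reason containment and airflow management feature so heavily in the load calculation.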

ASHRAE Technical Committee 9.9, which publishes the Thermal Guidelines for Data Processing Environments, defines acceptable temperature and humidity ranges for IT equipment. The guidelines specify a recommended envelope (18–27°C, or 64.4–80.6°F, inlet air for most equipment classes) plus wider allowable ranges for equipment classes A1 through A4; together these guide supply air temperature setpoints, humidity bands, and permissible excursions. Most modern servers tolerate supply air temperatures of 27°C (80.6°F) or more, and operating toward the upper end of the recommended range improves energy efficiency by reducing chiller demand.
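A monitoring or design check against these envelopes can be as simple as a range lookup. The sketch below uses the dry-bulb values commonly cited from the ASHRAE TC 9.9 guidelines (recommended 18–27°C; allowable A1 15–32°C, A2 10–35°C, A3 5–40°C, A4 5–45°C); treat them as assumptions to verify against the current edition, which also sets humidity and dew-point limits that this sketch ignores.

```python
# Dry-bulb inlet ranges (°C) as commonly cited from ASHRAE TC 9.9; verify against
# the current edition. Humidity and dew-point limits are deliberately omitted.
RECOMMENDED_C = (18.0, 27.0)
ALLOWABLE_C = {
    "A1": (15.0, 32.0),
    "A2": (10.0, 35.0),
    "A3": (5.0, 40.0),
    "A4": (5.0, 45.0),
}


def classify_inlet(temp_c: float, equipment_class: str = "A1") -> str:
    """Place an inlet temperature in the recommended or allowable envelope."""
    low, high = RECOMMENDED_C
    if low <= temp_c <= high:
        return "recommended"
    a_low, a_high = ALLOWABLE_C[equipment_class]
    if a_low <= temp_c <= a_high:
        return "allowable"
    return "out of range"


for t in (22.0, 29.5, 34.0):
    print(t, classify_inlet(t, "A1"), classify_inlet(t, "A2"))
```

The same 34°C reading is out of range for A1 equipment but still allowable for A2, which is why the equipment class matters when raising setpoints.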

Key elements in load calculation include:

- Rack density: Higher density deployments require in-row or liquid cooling instead of traditional perimeter systems.

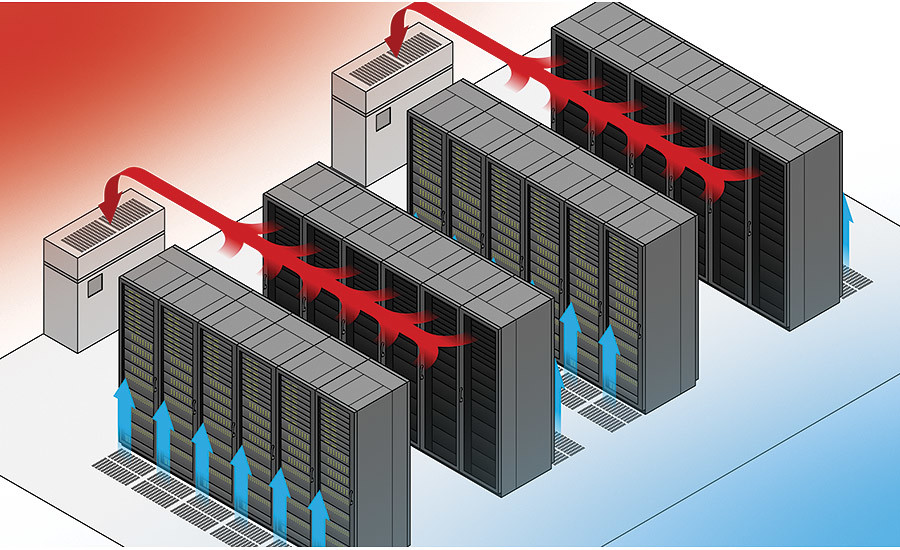

- Hot aisle/cold aisle containment: Containment strategies significantly influence return air temperatures and cooling efficiency.

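- Diversity and redundancy planning: Loads are rarely uniform across the white space, and cooling must still cover them when redundant units are offline. CFD (Computational Fluid Dynamics) modeling is often used to simulate airflow distribution, identify hotspots, and test unit-failure scenarios.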

- Growth forecasting: Data centers typically add IT load in phases over time, so cooling systems must be scalable. Undersizing leads to overheating risks; oversizing drives energy waste.
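Growth forecasting is often reduced to a question of timing: when will projected IT load outgrow the cooling already installed? A minimal sketch, assuming steady compound annual growth (real deployments tend to arrive in lumps) and hypothetical phase-one figures:

```python
from typing import Optional, Tuple


def first_year_exceeding(
    initial_it_kw: float,
    annual_growth: float,
    installed_cooling_kw: float,
    horizon_years: int = 15,
) -> Optional[Tuple[int, float]]:
    """Project IT load with compound growth; return (year, load_kw) for the first
    year the load exceeds installed cooling capacity, or None within the horizon."""
    load_kw = initial_it_kw
    for year in range(1, horizon_years + 1):
        load_kw *= 1.0 + annual_growth
        if load_kw > installed_cooling_kw:
            return year, load_kw
    return None


# Hypothetical phase 1: 1,200 kW of IT load today, 15% annual growth,
# 2,000 kW of usable (non-redundant) cooling capacity installed.
result = first_year_exceeding(1_200, 0.15, 2_000)
if result is None:
    print("Installed cooling covers the forecast horizon")
else:
    year, load_kw = result
    print(f"Forecast load {load_kw:,.0f} kW exceeds capacity in year {year}")
```

The answer tells the design team when the next cooling phase must be online, which helps avoid both the overheating risk of undersizing and the energy waste of building everything on day one.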

For large facilities, engineers increasingly use dynamic modeling tools that account for real-time IT load variability and simulate the interaction between cooling systems and server heat output. These models not only support compliance with ASHRAE Standard 90.4 (Energy Standard for Data Centers) but also allow predictive adjustments for load spikes.

Redundancy and Reliability Strategies

Downtime in a data center is unacceptable, which is why HVAC systems are designed with redundancy at their core. The Uptime Institute's Tier Standard classifies facilities from Tier I to Tier IV, and the ANSI/TIA-942 standard defines comparable Rated-1 through Rated-4 levels; each step corresponds to increasing availability and fault tolerance. HVAC redundancy strategies must align with the required rating:

- N: No redundancy. Installed capacity exactly meets the full load, so any unit failure or maintenance outage leaves cooling short of demand. Rarely acceptable for modern data centers.

- N+1: One additional unit is installed beyond the required number, allowing maintenance or failure without service interruption.

- 2N: Complete duplication of HVAC capacity and distribution paths. Each side can independently handle the full load, ensuring continuous uptime.

- 2N+1: The most robust design, with two fully redundant systems plus an extra unit. Typically found in Tier IV facilities.

Choosing the appropriate strategy is a balance between risk tolerance, cost, and efficiency. For example, financial institutions and hyperscale cloud providers often demand 2N or higher, while smaller colocation facilities may design around N+1 to reduce capital expense.
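The capital cost differences between these schemes come largely from unit counts. The sketch below counts identical cooling units for each scheme as described in the list above; the 1,000 kW design load and 250 kW unit size are hypothetical, and whole-kW integer inputs are assumed so the ceiling division stays exact.

```python
def units_required(design_load_kw: int, unit_capacity_kw: int, scheme: str) -> int:
    """Number of identical cooling units for a redundancy scheme (whole-kW inputs)."""
    if design_load_kw <= 0 or unit_capacity_kw <= 0:
        raise ValueError("loads and capacities must be positive")
    # N: the minimum number of units that together meet the design load
    # (integer ceiling division avoids floating-point rounding surprises).
    n = -(-design_load_kw // unit_capacity_kw)
    counts = {"N": n, "N+1": n + 1, "2N": 2 * n, "2N+1": 2 * n + 1}
    if scheme not in counts:
        raise ValueError(f"unknown scheme: {scheme}")
    return counts[scheme]


# Hypothetical hall: 1,000 kW design load served by 250 kW CRAH units.
for scheme in ("N", "N+1", "2N", "2N+1"):
    print(f"{scheme:>5}: {units_required(1_000, 250, scheme)} units")
```

Counting units is only part of the picture: a true 2N design also duplicates piping, power feeds, and controls, which this sketch does not model.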

Redundancy also extends to power supply for HVAC systems. Backup generators keep cooling running during utility outages, and Uninterruptible Power Supplies (UPS) carry critical cooling components such as fans, pumps, and controls through the gap before the generators come online. In some cases, even the chilled water distribution system is looped or duplicated to prevent single points of failure.

Cooling System Options for Data Centers

Data centers employ a range of cooling technologies, selected based on rack density, climate, and regulatory requirements.

Computer Room Air Conditioners (CRAC)

CRAC units are self-contained systems that cool and recirculate air within the white space. They typically use direct expansion (DX) refrigeration and are common in smaller facilities.

Computer Room Air Handlers (CRAH)

CRAH units rely on chilled water supplied from a central plant. They offer higher efficiency and scalability than CRAC units, particularly in large facilities. Variable-speed fans and chilled water valves allow precise load matching.

In-Row and Close-Coupled Cooling

As rack densities rise, perimeter cooling becomes insufficient. In-row units sit directly between server racks, delivering cold air where it is needed most and capturing hot exhaust immediately. This reduces bypass airflow and hotspots.

Liquid Cooling

For high-performance computing (HPC) and AI workloads, liquid cooling is increasingly essential. Options include direct-to-chip cooling, where coolant circulates through cold plates mounted on processors, and immersion cooling, where entire servers are submerged in dielectric fluids. While more complex, these methods handle extreme heat loads beyond the capability of air cooling.
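The advantage of liquid is mostly a matter of heat capacity: water absorbs far more heat per unit volume than air. The sketch below compares the flow needed to remove the same hypothetical 50 kW rack load with plain water (10 K rise) and with air (12 K rise). Plain-water properties are an assumption; many real loops use glycol mixtures with a lower specific heat, which raises the required flow.

```python
WATER_CP = 4186.0      # J/(kg·K), plain water (assumed; glycol mixes are lower)
WATER_DENSITY = 997.0  # kg/m³ at about 25 °C
AIR_CP = 1005.0        # J/(kg·K), dry air
AIR_DENSITY = 1.2      # kg/m³ near sea level (assumed)


def water_flow_l_per_min(heat_w: float, delta_t_k: float) -> float:
    """Coolant flow needed to absorb heat_w with a delta_t_k temperature rise."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    mass_flow_kg_s = heat_w / (WATER_CP * delta_t_k)
    return mass_flow_kg_s / WATER_DENSITY * 1000.0 * 60.0  # m³/s -> L/min


def air_flow_m3_per_s(heat_w: float, delta_t_k: float) -> float:
    """Airflow needed to remove the same heat with air."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    return heat_w / (AIR_DENSITY * AIR_CP * delta_t_k)


rack_w = 50_000  # hypothetical 50 kW HPC rack
print(f"Water, 10 K rise: {water_flow_l_per_min(rack_w, 10.0):.0f} L/min")
print(f"Air, 12 K rise:   {air_flow_m3_per_s(rack_w, 12.0):.2f} m³/s")
```

Roughly 72 L/min of water does the work of about 3.5 m³/s of air, a volume ratio of nearly 3,000 to 1.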

Economizers and Free Cooling

In climates with cool outdoor air or favorable water conditions, data centers can leverage air-side or water-side economizers to reduce mechanical cooling hours. This significantly improves energy performance and lowers PUE. Some hyperscale operators in northern Europe operate with free cooling for more than 60% of the year.
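A first estimate of economizer potential counts the hours in a year when outdoor air is cold enough. The sketch below treats each hour as fully economized or not, ignoring humidity and partial free cooling. The 18°C limit is an assumption, and the climate is synthetic (a sine-wave year); a real study would use measured hourly weather data for the site.

```python
import math
from typing import Iterable


def free_cooling_fraction(hourly_outdoor_c: Iterable[float], max_outdoor_c: float) -> float:
    """Fraction of hours at or below the economizer temperature limit."""
    temps = list(hourly_outdoor_c)
    if not temps:
        raise ValueError("need at least one hourly reading")
    return sum(1 for t in temps if t <= max_outdoor_c) / len(temps)


# Synthetic (not measured) climate: 7 °C annual mean, ±11 °C seasonal swing,
# ±4 °C daily swing, coldest in January and at midnight.
synthetic_year = [
    7.0
    + 11.0 * math.sin(2 * math.pi * (h / 8760 - 0.25))
    + 4.0 * math.sin(2 * math.pi * (h % 24) / 24 - math.pi / 2)
    for h in range(8760)
]

# Assumed economizer limit: outdoor air at or below 18 °C.
share = free_cooling_fraction(synthetic_year, max_outdoor_c=18.0)
print(f"Free-cooling hours: {share:.0%} of the year")
```

Raising the supply air setpoint raises the usable outdoor limit, which is how higher setpoints and economizers compound each other's savings.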

Hybrid Systems

Many facilities combine multiple approaches — for example, CRAHs with chilled water for baseline load and liquid cooling for HPC racks. The key is flexibility: as IT loads evolve, cooling strategies must adapt without requiring complete system replacement.

Energy Efficiency and Data Center Performance

Data centers consume vast amounts of energy, and cooling systems account for a major share. Regulators in a growing number of jurisdictions now require operators to demonstrate that their facilities run efficiently. Rather than focusing only on equipment specifications, energy codes for data centers evaluate how the entire cooling and electrical system performs under different load conditions.

The key metric here is Power Usage Effectiveness (PUE): the total energy a data center consumes divided by the energy used by its IT equipment. A perfect score would be 1.0, meaning every kilowatt-hour goes to IT; industry averages sit around 1.5 to 1.6, while best-in-class centers operate below 1.2. Cooling design has the biggest impact on this number because cooling is usually the largest non-IT load, and its air conditioners, fans, pumps, and chillers run constantly.
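Computed from metered energy, PUE is a single division. The sketch below uses hypothetical monthly meter readings broken down by end use to show both the ratio and how much of the total goes to cooling.

```python
def pue(total_facility_kwh: float, it_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy / IT equipment energy."""
    if it_kwh <= 0:
        raise ValueError("IT energy must be positive")
    if total_facility_kwh < it_kwh:
        raise ValueError("total facility energy cannot be less than IT energy")
    return total_facility_kwh / it_kwh


# Hypothetical monthly meter readings (kWh).
it_kwh = 1_000_000
cooling_kwh = 480_000       # chillers, CRAH/CRAC fans, pumps, cooling towers
power_losses_kwh = 90_000   # UPS, transformers, distribution
other_kwh = 30_000          # lighting, offices, security
total_kwh = it_kwh + cooling_kwh + power_losses_kwh + other_kwh

print(f"PUE = {pue(total_kwh, it_kwh):.2f}")
print(f"Cooling share of total energy = {cooling_kwh / total_kwh:.0%}")
```

Every kilowatt-hour removed from cooling lowers PUE directly: in this example, cutting cooling energy by 100,000 kWh would bring PUE from 1.60 to 1.50.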

Designers can improve efficiency — and comply with regulations — by:

- Using variable-speed fans and pumps that adjust automatically to server demand.

- Separating hot and cold air pathways to prevent wasted energy.

- Taking advantage of free cooling, where outside air or water can be used instead of mechanical chillers.

- Raising supply air temperature slightly (while staying within safe limits for servers) to reduce compressor work.

- Installing smart controls that fine-tune operation based on real-time IT load.
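Variable-speed fans pay off so strongly because of the fan affinity laws: in the ideal case, fan power scales with the cube of fan speed. The sketch below applies that idealized relationship; real savings are smaller because motor and drive efficiencies change with speed and systems usually carry some fixed static pressure.

```python
def fan_power_fraction(speed_fraction: float) -> float:
    """Ideal fan affinity law: shaft power scales with the cube of fan speed."""
    if speed_fraction <= 0:
        raise ValueError("speed_fraction must be positive")
    return speed_fraction ** 3


for speed in (1.0, 0.9, 0.8, 0.6):
    print(f"{speed:.0%} speed -> {fan_power_fraction(speed):.0%} of full-speed power")
```

Slowing a fan to 80% of full speed cuts its ideal power to about 51%, which is why matching airflow to actual server demand is among the cheapest efficiency measures.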

In some U.S. states, such as California, building permits for data centers require detailed energy modeling. In the European Union, the recast Energy Efficiency Directive requires data centers with an installed IT power demand of at least 500 kW to report their energy performance. The direction is clear: data centers must not only be reliable but also continuously prove that they operate with minimal energy waste.

Environmental and Compliance Considerations

Cooling systems are also affected by environmental regulations.

- Refrigerants: Refrigerants with high global warming potential (GWP), such as many HFCs, are being phased down under rules like the EU F-gas Regulation and the U.S. AIM Act. Lower-GWP alternatives are being adopted, but many are classified as mildly flammable (A2L), which changes how systems must be designed and monitored.

- Water usage: Data centers using water-based cooling systems must comply with stricter water conservation rules, especially in regions facing shortages. Many operators now report a “Water Usage Effectiveness” score alongside energy performance.

- Air quality for staff: Even though the main server halls are not continuously occupied, support areas like control rooms still need adequate ventilation and filtration to protect employees.

- Fire and safety: Cooling pipes, electrical systems, and ducts running through multiple floors must be installed in ways that slow the spread of fire and allow safe shutdowns in case of emergency.

Together, these rules ensure that data centers are not only efficient but also safe for workers and environmentally responsible.
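Two of these obligations reduce to simple reported figures: the CO2-equivalent of a refrigerant charge (charge mass multiplied by GWP) and Water Usage Effectiveness (annual site water use divided by IT energy, in L/kWh). The sketch below uses 100-year GWP values from the IPCC Fourth Assessment Report for R-410A and R-32; check which values your jurisdiction's rules reference. The charge size and site figures are hypothetical.

```python
# 100-year GWP values (IPCC AR4); confirm which values your regulation uses.
GWP_100 = {"R-410A": 2088, "R-32": 675}


def charge_co2e_tonnes(charge_kg: float, gwp: float) -> float:
    """CO2-equivalent of a refrigerant charge, in metric tonnes."""
    return charge_kg * gwp / 1000.0


def wue_l_per_kwh(site_water_liters: float, it_energy_kwh: float) -> float:
    """Water Usage Effectiveness: annual site water use / IT equipment energy."""
    if it_energy_kwh <= 0:
        raise ValueError("IT energy must be positive")
    return site_water_liters / it_energy_kwh


# Hypothetical 40 kg charge in one cooling unit.
for refrigerant, gwp in GWP_100.items():
    print(f"{refrigerant}: {charge_co2e_tonnes(40.0, gwp):.1f} t CO2e")

# Hypothetical site: 10 MW average IT load, 50,000 m³ of water per year.
it_energy_kwh = 10_000 * 8760
print(f"WUE = {wue_l_per_kwh(50_000 * 1000, it_energy_kwh):.2f} L/kWh")
```

The same 40 kg charge carries roughly three times the CO2-equivalent in R-410A as in R-32, which is the kind of difference refrigerant phase-down rules are built around.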

Monitoring, Controls, and Smart Integration

Modern data centers cannot rely on manual monitoring of cooling systems. Regulations and industry standards increasingly call for continuous tracking of performance, energy use, and safety conditions. This means HVAC systems must connect directly to building management platforms and data center monitoring software.

Sensors placed throughout the facility measure temperature, humidity, air pressure, and refrigerant levels. These sensors feed into centralized dashboards where operators can see real-time conditions and respond to issues before they escalate. Advanced systems use predictive analytics to forecast where hotspots might develop or when equipment is likely to need maintenance. This approach reduces the risk of unexpected failures and helps maintain compliance with efficiency and safety rules.

Leak detection and automated alarms are particularly important when low-GWP refrigerants are used, since many of the new options are mildly flammable. Automatic shutdown or ventilation can be triggered by the monitoring system if a leak is detected, ensuring both safety and compliance.
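The escalation described above is typically staged: first an alarm, then ventilation, then isolation or shutdown of the affected unit. The sketch below encodes that staging as a threshold ladder. The ppm set points are placeholders only; real values depend on the refrigerant's safety classification, the room volume, and the applicable safety codes.

```python
from enum import Enum


class LeakAction(Enum):
    NORMAL = "normal"
    ALARM = "alarm"
    VENTILATE = "alarm + ventilation"
    SHUTDOWN = "alarm + ventilation + isolate/shut down unit"


# Hypothetical set points (ppm); derive real ones from the refrigerant data and codes.
ALARM_PPM = 500
VENTILATE_PPM = 1_000
SHUTDOWN_PPM = 3_000


def leak_response(ppm: float) -> LeakAction:
    """Map a refrigerant concentration reading to the staged response."""
    if ppm < 0:
        raise ValueError("concentration cannot be negative")
    if ppm >= SHUTDOWN_PPM:
        return LeakAction.SHUTDOWN
    if ppm >= VENTILATE_PPM:
        return LeakAction.VENTILATE
    if ppm >= ALARM_PPM:
        return LeakAction.ALARM
    return LeakAction.NORMAL


for reading in (120, 650, 1_800, 4_200):
    print(reading, leak_response(reading).value)
```

Checking the highest threshold first keeps the ladder unambiguous: a reading always triggers the most severe stage it qualifies for.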

Best Practices for Reliable and Compliant Systems

Designing HVAC for a data center requires a careful balance: reliability, efficiency, and compliance must all be achieved at once. Industry best practices include:

- Plan early: Energy codes, cooling load forecasts, and redundancy strategies should be addressed in the concept phase, not left until inspections.

- Document thoroughly: Diagrams, cooling capacity calculations, and airflow models are often required for approvals and future audits.

- Commissioning and testing: Every new data center should undergo detailed commissioning to ensure cooling performance meets both design intent and code requirements.

- Prepare for the future: As IT loads increase and regulations tighten, scalable and adaptable cooling designs prevent costly retrofits.


Conclusion – Building Future-Ready Data Center Cooling

HVAC systems in data centers are more than just mechanical infrastructure; they are the backbone of reliability and efficiency. With regulations tightening around energy use, refrigerants, and monitoring, design teams must take a proactive approach.

By combining accurate load forecasting, redundant system architecture, efficient cooling technologies, and smart integration, operators can achieve both compliance and performance. Ultimately, building codes and technology regulations are guiding the industry toward data centers that are safer, greener, and more reliable — a win for both business continuity and sustainability.